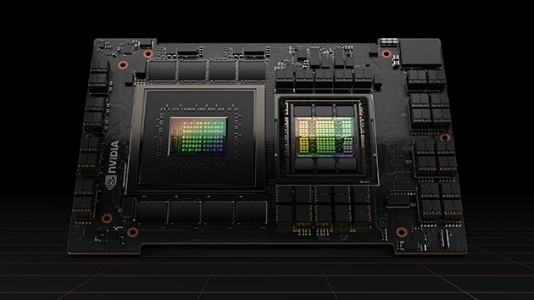

Nvidia unveils new Blackwell AI 'super chips'

All-conquering chip design firm Nvidia has unveiled its Blackwell graphics processing units (GPUs), which are reportedly up to 30x faster than their predecessors on large language model inference.

Nvidia says the Blackwell platform will help organisations build and run real-time generative AI on trillion-parameter large language models at up to 25x lower cost and energy consumption than its predecessor.

Nvidia announced the platform at its GTC 2024 developer conference, where CEO Jensen Huang described Blackwell as a significant step forward from the previous Hopper platform. He noted that Blackwell packs 208 billion transistors (up from 80 billion in Hopper) across two GPU dies, which are connected by a 10 TB/s chip-to-chip link so that they operate as a single, unified GPU.

Despite the higher transistor count, Blackwell is also more energy efficient than previous GPUs. Huang gave the example of training a 1.8-trillion-parameter model, which would previously have required 8,000 Hopper GPUs drawing 15 megawatts, but can now be done with just 2,000 Blackwell GPUs drawing four megawatts: a quarter of the GPUs and roughly a quarter of the power.

“For three decades we’ve pursued accelerated computing, with the goal of enabling transformative breakthroughs like deep learning and AI,” said Jensen Huang, founder and CEO of Nvidia. “Generative AI is the defining technology of our time. Blackwell is the engine to power this new industrial revolution. Working with the most dynamic companies in the world, we will realize the promise of AI for every industry.”

Major cloud providers have already committed to offering Blackwell-powered systems, with AWS, Google Cloud, Microsoft Azure and Oracle Cloud Infrastructure among those on board.